LLM Test Bot and Feedback Framework

Business Opportunity

The primary objective of the LLM Test Bot and Feedback projects is to develop an AI-powered chatbot that learns and improves its performance based on the content provided by users. By continuously refining its knowledge base and understanding, the chatbot aims to deliver accurate and helpful responses to user queries, thereby enhancing user satisfaction and driving business growth. This presents a significant opportunity for businesses to leverage AI technology to improve customer service and engagement.

Solution / Approach

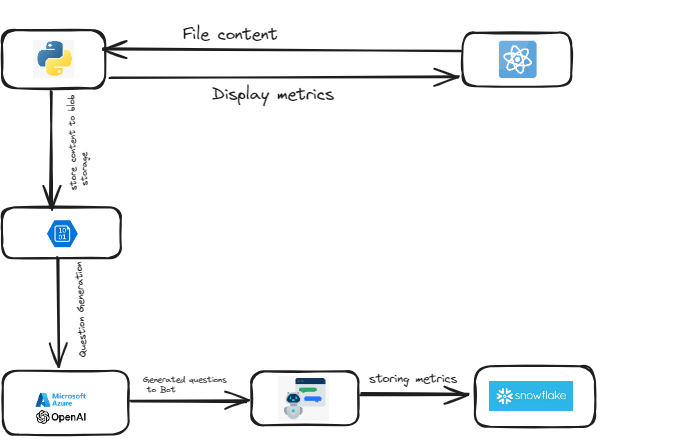

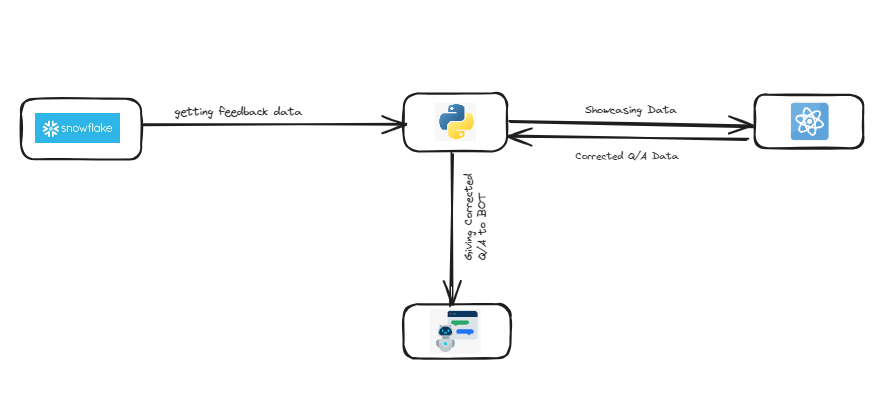

The LLM Test Bot and Feedback system uses Azure services for its backend. The Test Bot project generates questions based on the content users upload, while the LLM Feedback project collects data on unanswered cases and disliked questions. Together, the insights from these two projects support the development of a more effective and user-friendly chatbot.
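The question-generation step of the Test Bot can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: the prompt template, the chunk size, and the helpers `chunk_text`, `build_prompts`, and `parse_questions` are all hypothetical. The call that sends each prompt to the OpenAI model is deliberately omitted so the example runs without credentials; only the preparation of prompts and the parsing of a numbered reply are shown.

```python
import re
from typing import List

# Hypothetical prompt template; the project's actual prompt is not documented.
PROMPT_TEMPLATE = (
    "Read the following content and write {n} questions that a user "
    "might ask about it. Return one question per line, numbered.\n\n"
    "Content:\n{content}"
)


def chunk_text(text: str, max_words: int = 200) -> List[str]:
    """Split uploaded content into word-bounded chunks so each prompt stays small."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def build_prompts(text: str, questions_per_chunk: int = 3, max_words: int = 200) -> List[str]:
    """Create one question-generation prompt per chunk of uploaded content."""
    return [
        PROMPT_TEMPLATE.format(n=questions_per_chunk, content=chunk)
        for chunk in chunk_text(text, max_words)
    ]


def parse_questions(model_output: str) -> List[str]:
    """Extract questions from a numbered reply such as '1. What is X?' or '2) Why?'."""
    questions = []
    for line in model_output.splitlines():
        match = re.match(r"^\s*\d+[.)]\s*(.+?)\s*$", line)
        if match:  # lines without a leading number are ignored
            questions.append(match.group(1))
    return questions


if __name__ == "__main__":
    prompts = build_prompts("Azure hosts the backend. " * 100)  # 400 words
    print(len(prompts))  # 2 chunks of up to 200 words each
    print(parse_questions("1. What hosts the backend?\n2) Which team owns it?\nnoise"))
```

Chunking by word count is a simple stand-in; a token-based splitter would track model limits more precisely.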

The system has two main components: the Test Bot, which creates questions based on user content, and the Feedback module, which gathers insights from user interactions. The Feedback module fetches data from the CAIP table of KC Bot (unanswered cases and disliked questions) and displays it in the user interface, where users can validate the questions and provide correct answers. This iterative process refines the chatbot's knowledge base and improves its performance over time, creating a more effective and personalized user experience.
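The review loop described above (fetch unanswered and disliked questions, then store the answer a user validates) can be sketched with an in-memory SQLite database standing in for the CAIP table. The schema, the column names (`question`, `status`, `corrected_answer`), and the status values are hypothetical, since the real table layout is not documented here; against Snowflake the SQL would be similar, but the connection setup and placeholder syntax may differ depending on the driver.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical schema standing in for the CAIP table; real column names are not documented.
SCHEMA = (
    "CREATE TABLE caip ("
    "id INTEGER PRIMARY KEY, "
    "question TEXT NOT NULL, "
    "status TEXT NOT NULL, "  # assumed values: 'answered', 'unanswered', 'disliked'
    "corrected_answer TEXT)"  # filled in once a user validates an answer
)


def fetch_review_queue(conn: sqlite3.Connection) -> List[Tuple[int, str, str]]:
    """Return unanswered or disliked questions that do not yet have a corrected answer."""
    cursor = conn.execute(
        "SELECT id, question, status FROM caip "
        "WHERE status IN ('unanswered', 'disliked') AND corrected_answer IS NULL "
        "ORDER BY id"
    )
    return cursor.fetchall()


def submit_correction(conn: sqlite3.Connection, row_id: int, answer: str) -> None:
    """Store the answer a user validated in the UI so the row leaves the review queue."""
    conn.execute("UPDATE caip SET corrected_answer = ? WHERE id = ?", (answer, row_id))
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO caip (question, status) VALUES (?, ?)",
        [
            ("How do I reset my password?", "unanswered"),
            ("What are the office hours?", "answered"),
            ("Where is the VPN guide?", "disliked"),
        ],
    )
    print(fetch_review_queue(conn))  # rows 1 and 3
    submit_correction(conn, 1, "Use the self-service portal.")
    print(fetch_review_queue(conn))  # only row 3 remains
```

Filtering on `corrected_answer IS NULL` keeps already-validated questions out of the UI, so each question is reviewed once.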

Architecture

Key Metrics

Critical metrics for evaluating the success of the LLM Test Bot and Feedback projects include:

- Total number of questions generated: The number of questions the Test Bot creates from user content, indicating how much of the uploaded material it covers.

- Average response time: The mean time taken by the chatbot to respond to individual user queries, providing insights into its responsiveness and performance.

- Number of questions answered: The number of user queries the chatbot answers, reflecting how well its knowledge base covers what users ask.

- User satisfaction: Measuring user satisfaction with the chatbot's responses is essential for determining the overall success of the system and identifying areas for improvement.

- Answer validation: Tracking the number of corrected answers in the feedback process helps assess the quality of the chatbot's knowledge base and its learning capabilities.
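The metrics listed above could be computed from interaction logs roughly as follows. This is a sketch under assumptions: the `Interaction` record and its fields are hypothetical, and treating user satisfaction as the share of liked responses among rated ones (like = 1, dislike = 0) is one possible definition, not a formula taken from the project.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Interaction:
    """Hypothetical log record for one user query."""
    question: str
    response_seconds: float
    answered: bool
    rating: Optional[int] = None  # assumed: 1 = like, 0 = dislike, None = not rated
    corrected: bool = False       # True if a reviewer supplied a corrected answer


def compute_metrics(interactions: List[Interaction], generated_questions: int) -> Dict[str, float]:
    """Aggregate the key metrics from a list of logged interactions."""
    rated = [i.rating for i in interactions if i.rating is not None]
    return {
        "questions_generated": generated_questions,
        "avg_response_seconds": (
            mean(i.response_seconds for i in interactions) if interactions else 0.0
        ),
        "questions_answered": sum(i.answered for i in interactions),
        "satisfaction_rate": sum(rated) / len(rated) if rated else 0.0,
        "answers_corrected": sum(i.corrected for i in interactions),
    }


if __name__ == "__main__":
    logs = [
        Interaction("Q1", 1.2, True, rating=1),
        Interaction("Q2", 2.0, False, rating=0, corrected=True),
        Interaction("Q3", 0.8, True),
    ]
    print(compute_metrics(logs, generated_questions=12))
```

Unrated interactions are excluded from the satisfaction rate so that silence is not counted as either approval or disapproval.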

Tech Stack

- React JS

- Flask

- Azure

- Snowflake

- OpenAI

Resource Links

Feedback

We appreciate your feedback! Please share any suggestions or improvements for our product by clicking the following link: